1. Background

OpenAI markets Sora as a “world simulator” capable of generating minute‑long videos from textual descriptions. While early demos captivated the internet with cinematic quality, experts quickly identified a key limitation: although Sora excels at reproducing textures and motion, it lacks a robust understanding of the physical rules governing these interactions. As one analyst noted:

Sora can draw a chess board and pieces but doesn’t understand anything about the game1

Intrigued by the challenge of evaluating logical understanding in video models, we conducted our own tests even before the release of Google’s paper on puzzle solving.2 Our initial experiments with chess puzzles can be found in our previous post here.

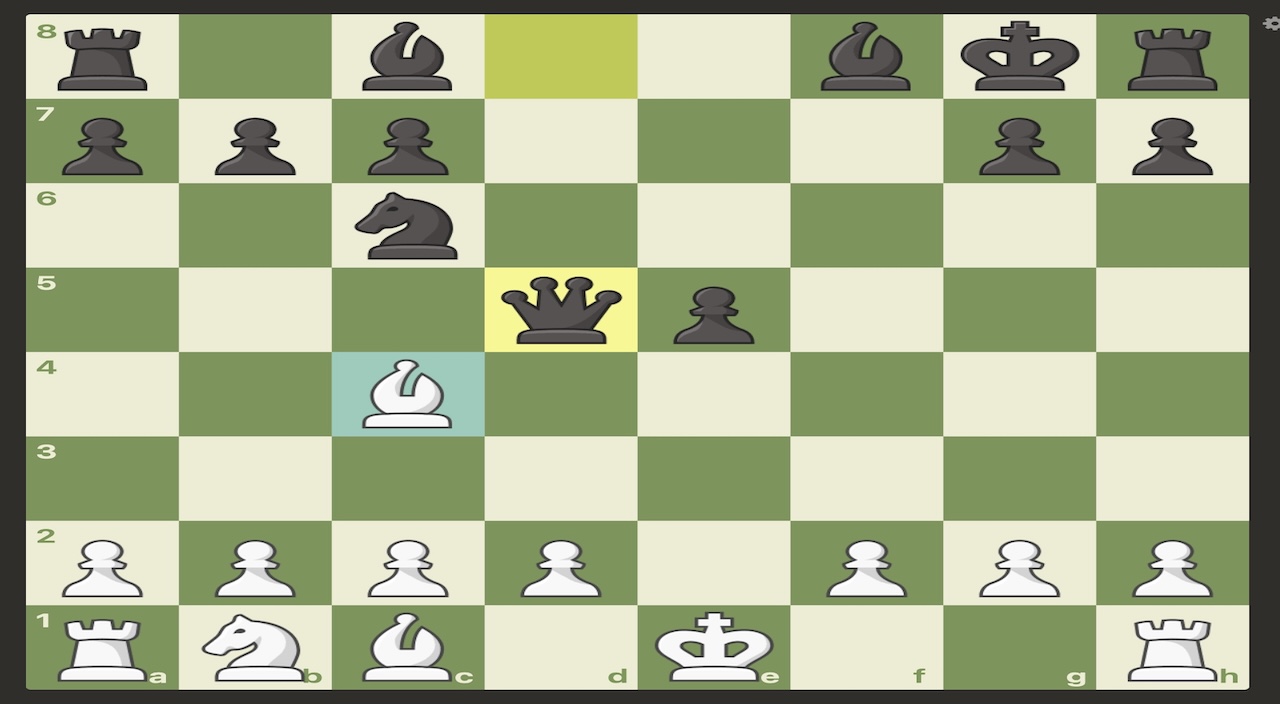

Image generated from ChatGPT Images. The image contains imperfections (for example, the black rook is in the wrong position), and we saw the same kind of error in the video model. This foreshadowed the results to come.

2. Methodology

To explore Sora 2’s chess understanding, we generated a series of positions and asked the model to continue the game.

We used the following prompt for the video generation model:

Please play the next best move as black. Please follow the rules of chess.

We replace "black" in the above prompt with "white" depending on which side is to move next.
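The substitution described above can be sketched as a small helper. This is only an illustration: the names `PROMPT_TEMPLATE` and `build_prompt` are hypothetical and are not part of any Sora API, and in our experiments the prompt was entered by hand in the web interface.

```python
# Hypothetical helper: fills the side to move into the prompt quoted above.
PROMPT_TEMPLATE = (
    "Please play the next best move as {side}. "
    "Please follow the rules of chess."
)


def build_prompt(side_to_move: str) -> str:
    """Return the video prompt for the side that plays next."""
    side = side_to_move.strip().lower()
    if side not in ("white", "black"):
        raise ValueError(f"side_to_move must be 'white' or 'black', got {side_to_move!r}")
    return PROMPT_TEMPLATE.format(side=side)


if __name__ == "__main__":
    print(build_prompt("black"))
    print(build_prompt("white"))
```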

We ran the experiments only on Sora 2, through the web interface at sora.chatgpt.com.

We repeated this process for 7 games to explore whether the model's understanding of chess differs across board color styles.

We also tried the spinner technique from the Google paper to limit the model's leeway.3

3. Results

3.1 Game 1

The cropping occurs because the defined resolutions for Sora 2 (1280x720 and 720x1280) trigger an internal pipeline that crops the input image to match these dimensions, as per the Sora 2 prompting guide.
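One way to avoid the crop is to fit the board image inside a supported frame before uploading it. The sketch below only computes the geometry, and it rests on an assumption: that padding the image (letterboxing) is an acceptable way to reach 1280x720 or 720x1280. The function name `letterbox_geometry` is hypothetical, and the resizing and padding themselves would be done with an image library of your choice.

```python
def letterbox_geometry(src_w: int, src_h: int, dst_w: int = 1280, dst_h: int = 720):
    """Scale an image to fit inside dst_w x dst_h without cropping.

    Returns (new_w, new_h, pad_x, pad_y): the scaled size and the left/top
    padding needed to center the scaled image on the target canvas.
    """
    if min(src_w, src_h, dst_w, dst_h) <= 0:
        raise ValueError("all dimensions must be positive")
    # Use the smaller ratio so that both dimensions fit inside the target.
    scale = min(dst_w / src_w, dst_h / src_h)
    new_w = min(dst_w, max(1, round(src_w * scale)))
    new_h = min(dst_h, max(1, round(src_h * scale)))
    pad_x = (dst_w - new_w) // 2
    pad_y = (dst_h - new_h) // 2
    return new_w, new_h, pad_x, pad_y


if __name__ == "__main__":
    # A square 1024x1024 board image placed on a landscape frame.
    print(letterbox_geometry(1024, 1024))
    # The same image placed on a portrait frame.
    print(letterbox_geometry(1024, 1024, 720, 1280))
```

For a square board, this keeps all 64 squares visible and leaves blank bars on two sides of the frame.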

3.2 Game 2

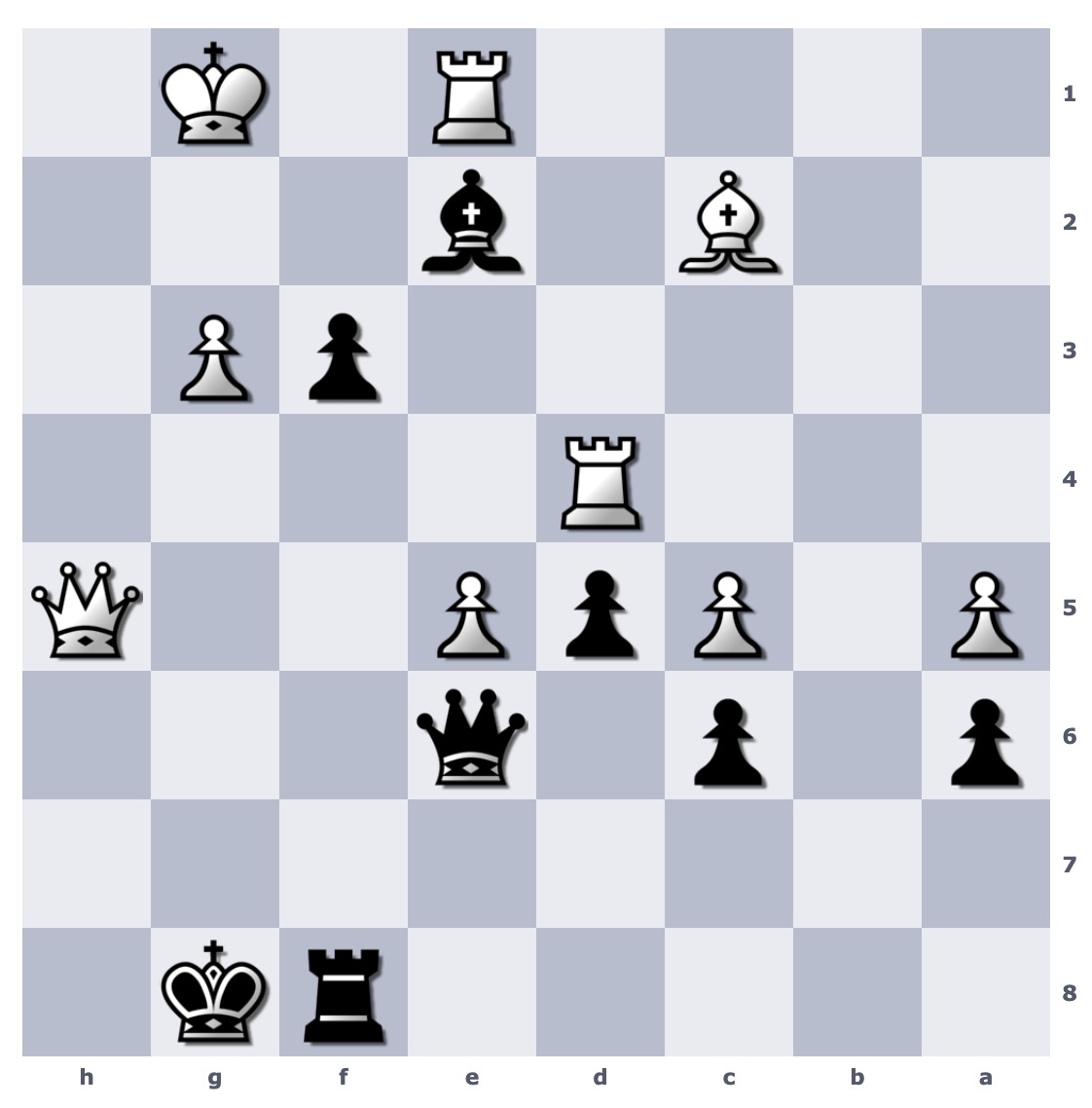

After resizing the input image to a supported resolution, the model successfully plays a valid move. It moves the knight to d3, which is a legal move, though not necessarily the best one. However, subsequent moves in the generated video become invalid.
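Part of judging a move like this can be automated. The sketch below checks only the geometry of a knight move (the L-shape). It does not know the board position, so it cannot confirm full legality, which also requires that the destination is not occupied by a friendly piece and that the mover's king is not left in check. The starting square `b2` in the usage example is a made-up value for illustration, not the square from our game.

```python
def square_to_coords(square: str) -> tuple[int, int]:
    """Convert algebraic notation such as 'd3' to zero-based (file, rank)."""
    if len(square) != 2 or square[0] not in "abcdefgh" or square[1] not in "12345678":
        raise ValueError(f"not a valid square: {square!r}")
    return ord(square[0]) - ord("a"), int(square[1]) - 1


def is_knight_jump(src: str, dst: str) -> bool:
    """True if src -> dst is an L-shaped knight move (geometry only)."""
    f1, r1 = square_to_coords(src)
    f2, r2 = square_to_coords(dst)
    # A knight moves two squares along one axis and one along the other.
    return sorted((abs(f1 - f2), abs(r1 - r2))) == [1, 2]


if __name__ == "__main__":
    # 'b2' is a hypothetical starting square used only for this example.
    print(is_knight_jump("b2", "d3"))
    print(is_knight_jump("b2", "d4"))
```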

3.3 Game 3

Using a different board style, the model attempts the next move. We encounter the cropping issue again in both videos.

In the first video, the motion becomes surprisingly fast as the model seems to lose context of the game, rearranging pieces chaotically. It also generates extra bishops after the panning.
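Extra pieces like these are easy to flag once the board before and after the move is written down. The sketch below assumes both positions are available as FEN strings, transcribed by hand or by a board recognizer (neither is part of our setup). The function names are hypothetical. Note that a pawn promotion legitimately adds a piece of a new type, so a flagged piece is a hint to look closer, not proof of an illegal move.

```python
from collections import Counter


def piece_counts(fen: str) -> Counter:
    """Count pieces by FEN letter (uppercase = white, lowercase = black)."""
    placement = fen.split()[0]  # ignore side to move, castling rights, etc.
    return Counter(ch for ch in placement if ch.isalpha())


def appeared_pieces(before_fen: str, after_fen: str) -> dict:
    """Return pieces that exist after the move but did not exist before it."""
    # Counter subtraction keeps only positive differences.
    return dict(piece_counts(after_fen) - piece_counts(before_fen))


if __name__ == "__main__":
    start = "rnbqkbnr/pppppppp/8/8/8/8/PPPPPPPP/RNBQKBNR w KQkq - 0 1"
    # Same position with a third white bishop placed on e4.
    with_extra_bishop = "rnbqkbnr/pppppppp/8/8/4B3/8/PPPPPPPP/RNBQKBNR b KQkq - 0 1"
    print(appeared_pieces(start, with_extra_bishop))
```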

In the second video, the model attempts to capture a white piece, but the queen performs an invalid move.

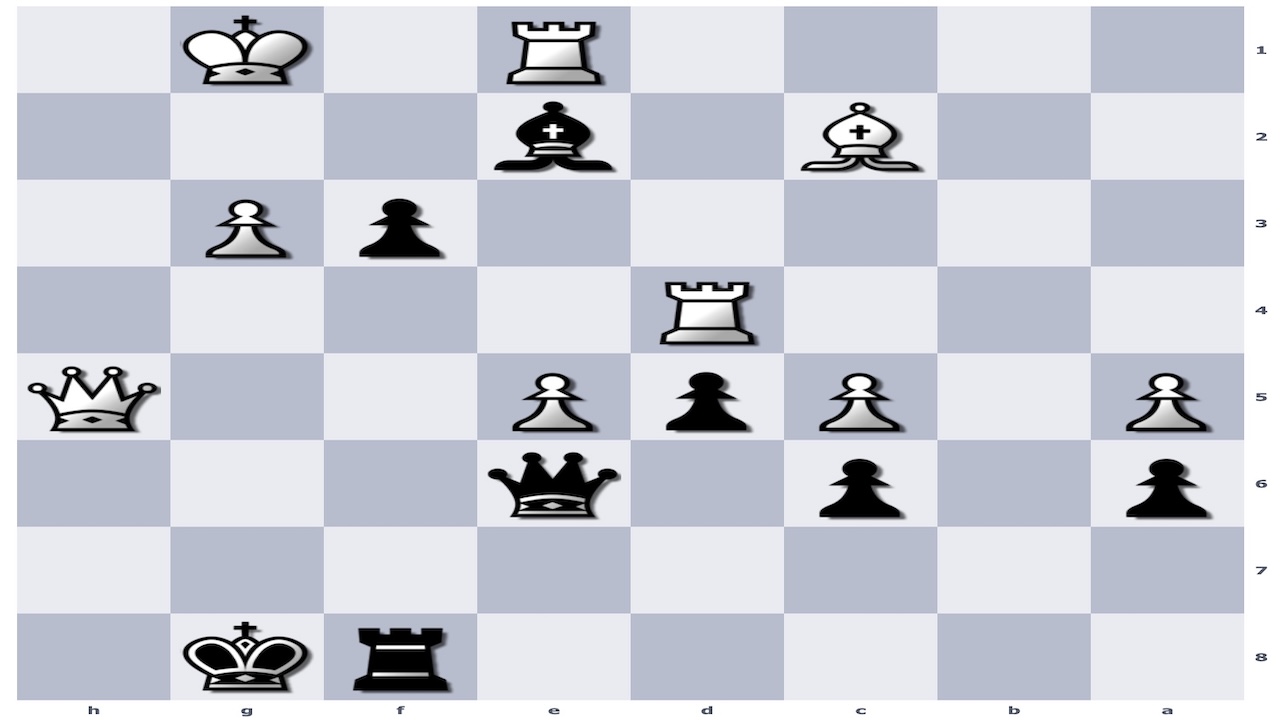

3.4 Game 4

Despite resizing the input image to match the required resolution, the model plays a completely illegal move.

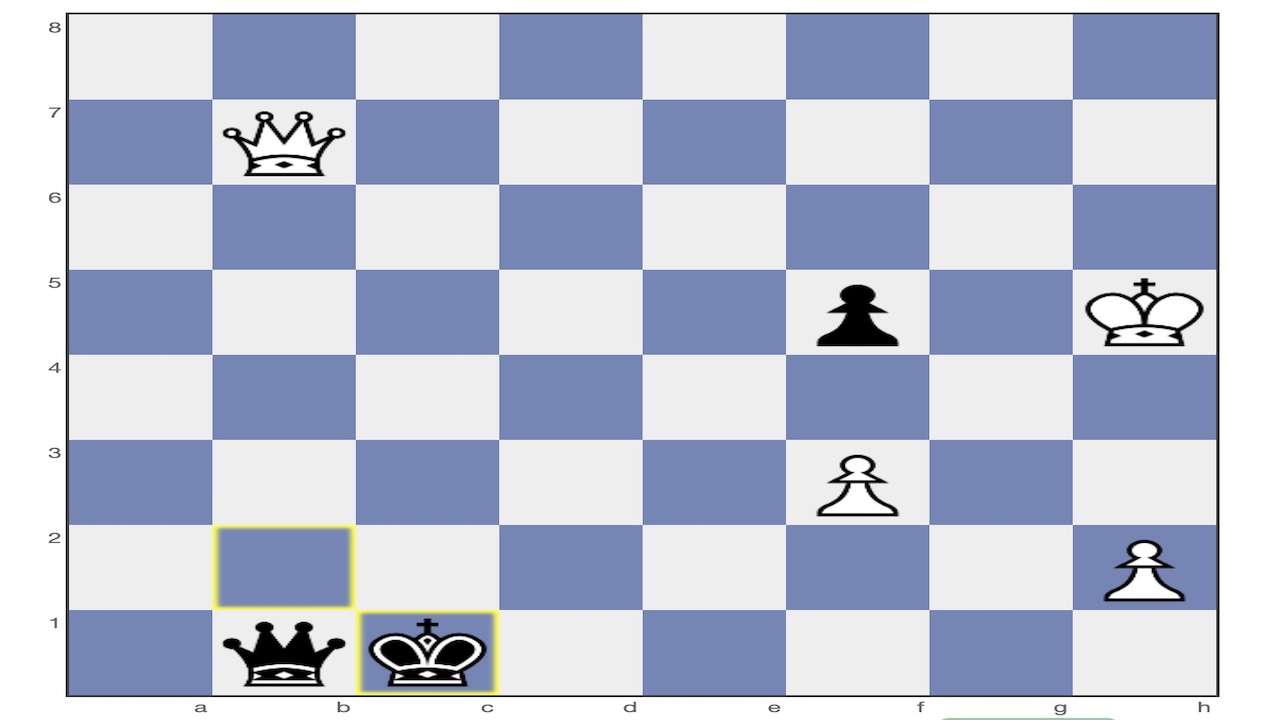

3.5 Game 5

We again resize the input image to a supported resolution.

In the first video, the white queen moves to check the black king at c2. The move is valid but not the best one, because the black queen captures the white queen on the next move. The model also misplaces the queen after the move.

In the second video, we attempt the spinning color wheel technique2 to keep the model from moving random pieces after playing its turn. Unfortunately, this does not improve performance; the model fails to play strategically or even legally.

Discussion

Piece Recognition: The model generally distinguishes between black and white pieces and attempts to follow the prompt’s instruction to play as a specific side.

Move Validity: It demonstrates a basic understanding of how pieces move, but it applies that understanding inconsistently.

Short-Term Planning: The model seems capable of identifying a single “next best move” but fails to maintain a coherent game state or strategy for subsequent moves.

Visual Bias: Certain board color patterns (e.g., yellow/green) seem to yield better results, likely due to their prevalence in the training dataset (resembling popular platforms like chess.com).

Prompt Engineering Limitations: Techniques like the “spinning color wheel”2 did not significantly improve the model’s logical accuracy in this specific domain.

Conclusion

Sora 2 is a triumph of visual synthesis, but it is not a logic engine. It has some understanding of chess but cannot consistently replicate the performance of a chess engine, which matters for tasks requiring strict adherence to logical rules, like chess, math, or physics simulations. Sora generates the dream of chess, not the game itself.

At Nillit AI, we are building contextual models to detect such inconsistencies in videos and generate perfect AI videos that understand the universe of the creator. Follow our journey on LinkedIn.

Citation

This can be cited as:

@misc{nillitai2025sora2chessfail,
  title={Sora 2 Chess Fail},
  author={Nillit AI Team},
  journal={Nillit AI Blog},
  year={2025},
  month={Dec},
  url={https://nillit.ai/blog/sora-2-chess-fail},
}